Did You Know?

- Over 72% of QA teams are exploring or planning to adopt AI-driven testing tools in 2025.

- Gartner predicts that by 2028, over 33% of enterprise software will include agentic AI — up from less than 1% in 2024.

- The global AI agents market is expected to grow from $5.4B in 2024 to over $45B by 2030, with a CAGR of 45.8% (Grand View Research).

AI is no longer just assisting software testing. It is starting to run and optimize the tests themselves.

TL;DR

Traditional test automation relies on coded scripts that follow exact steps.

Agentic AI testing uses autonomous agents that understand goals, generate tests, and adapt in real time.

This guide breaks down both approaches and helps you decide which one suits your QA maturity and release velocity.

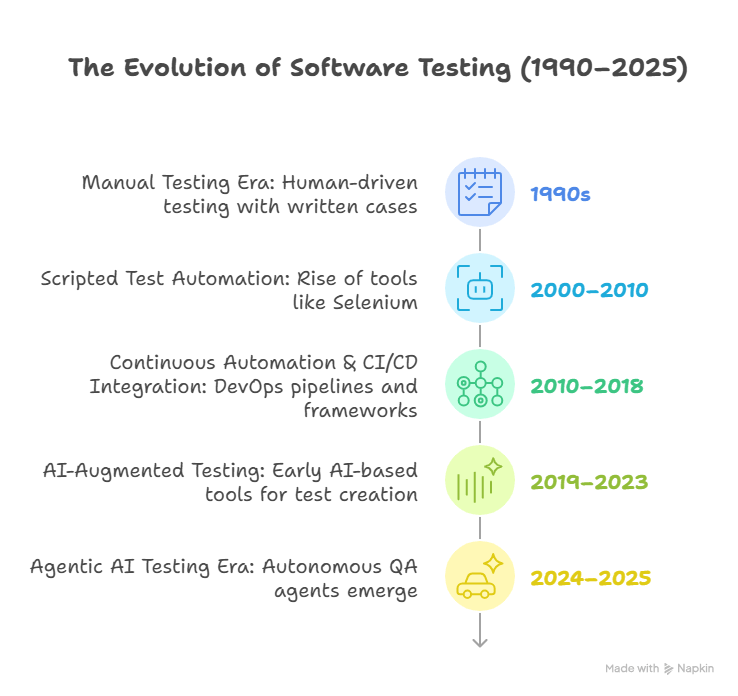

The Evolution of Software Testing

Software testing has evolved through three major phases:

| Era | Description | Limitation |
| --- | --- | --- |
| Manual Testing | Human testers validate each step manually. | Slow, repetitive, non-scalable. |
| Traditional Test Automation | Uses frameworks like Selenium, Cypress, Playwright to execute scripted steps. | Fragile locators, high maintenance, limited adaptability. |
| Agentic AI Testing | AI agents autonomously plan, generate, execute, and heal tests. | Emerging practice; requires governance and clear ROI tracking. |

Testing has always been about efficiency.

The difference now is intelligence: machines can understand, learn, and adapt testing strategies automatically.

What Is Traditional Test Automation?

Traditional automation frameworks depend on explicit instructions:

Each test script defines how to test — every click, input, and validation step.

Common Characteristics

- Hard-coded locators (XPath, CSS, ID) for UI elements.

- High maintenance whenever UI or logic changes.

- Primarily supports regression and smoke testing.

- Dependent on QA engineers or SDETs for script updates.

- Limited adaptability; can’t understand intent or context.

- Works best for stable applications with infrequent UI updates.

This method improved productivity in the 2010s, but it struggles to scale in agile or microservice-based architectures where code changes daily and every UI change can break existing scripts.
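To make the brittleness concrete, here is a minimal sketch of a scripted test with a hard-coded locator. It does not use a real browser or framework: the page is a hypothetical in-memory dictionary, and `find_by_id`, `scripted_login_test`, and `run` are illustrative names, not part of Selenium, Cypress, or Playwright.

```python
# Hypothetical stand-in for a rendered page: element id -> visible label.
PAGE_V1 = {"username": "", "password": "", "login-btn": "Login"}
# Same page after a redesign: the button id and label were renamed.
PAGE_V2 = {"username": "", "password": "", "signin-btn": "Sign In"}


class ElementNotFound(Exception):
    """Raised when a hard-coded locator no longer matches any element."""


def find_by_id(page: dict, element_id: str) -> str:
    """Strict ID lookup, similar in spirit to what a scripted framework does."""
    if element_id not in page:
        raise ElementNotFound(element_id)
    return page[element_id]


def scripted_login_test(page: dict) -> bool:
    """Every step is spelled out, and the locator 'login-btn' is hard-coded."""
    find_by_id(page, "username")
    find_by_id(page, "password")
    return find_by_id(page, "login-btn") == "Login"


def run(page: dict) -> str:
    """Run the scripted test and report pass, fail, or a broken locator."""
    try:
        return "pass" if scripted_login_test(page) else "fail"
    except ElementNotFound as exc:
        return f"broken locator: {exc}"
```

Calling `run(PAGE_V1)` returns `"pass"`, while `run(PAGE_V2)` returns `"broken locator: login-btn"`. The login feature still works in the second version; only the script's assumption about the ID is out of date.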

👉 For a fundamentals refresh, explore Understanding Test Cases in Software Testing.

What Is Agentic AI Testing?

Agentic AI testing is an AI-first testing approach powered by autonomous agents that plan, execute, and optimize test cases.

Instead of following fixed scripts, the agent understands the purpose of a feature and determines how to test it dynamically.

Key Characteristics

- Goal-Oriented: Agents focus on validating outcomes, not reproducing steps.

- Self-Healing: When an element changes, the agent updates the affected locator automatically.

- Multi-Modal Understanding: Interprets PRDs, UI layouts, APIs, or logs.

- Continuous Learning: Adapts to product behavior and usage analytics.

- No-Code or Low-Code: Human testers can create tests in plain English.

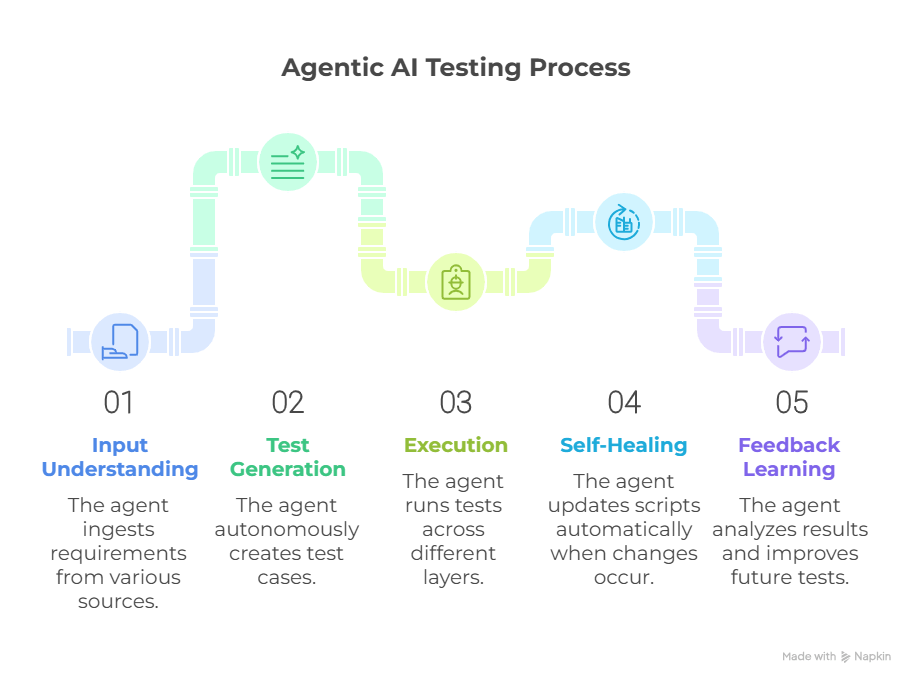

How It Works (Simplified)

- Input Understanding: The agent ingests requirements — PRDs, design files, user stories.

- Test Generation: It creates test cases autonomously using contextual reasoning.

- Execution: It runs across layers — UI, API, database, integrations.

- Self-Healing: When a UI or flow changes, the agent updates scripts automatically.

- Feedback Learning: Results are analyzed, and future tests improve automatically.
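The steps above can be sketched as a very small loop. This is a toy illustration under stated assumptions, not how any particular product works: requirements are a plain-text list, the application under test is a hypothetical callable that returns `True` or `False` per goal, and the function names (`understand`, `generate`, `execute`, `reorder`) are invented for this example. Self-healing is left out here to keep the loop short.

```python
def understand(requirements: str) -> list[str]:
    """Step 1: split a plain-text requirements doc into individual goals."""
    return [line.strip("- ").strip() for line in requirements.splitlines() if line.strip()]


def generate(goals: list[str]) -> list[dict]:
    """Step 2: create one test record per goal.

    A real agent would reason about each goal; this stub only wraps it.
    """
    return [{"goal": goal, "runs": 0, "failures": 0} for goal in goals]


def execute(test: dict, app) -> bool:
    """Step 3: run the test. `app` is a hypothetical callable (goal -> bool)
    standing in for UI, API, and database checks."""
    passed = app(test["goal"])
    test["runs"] += 1
    if not passed:
        test["failures"] += 1
    return passed


def reorder(tests: list[dict]) -> list[dict]:
    """Step 5: feedback. Tests that failed most often run first next cycle."""
    return sorted(tests, key=lambda t: t["failures"], reverse=True)
```

A usage example: with the requirements `"- User can log in\n- User can reset password"` and a fake app that fails any goal containing "reset", the second test fails and `reorder` moves it to the front of the next cycle.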

Read also: Agentic AI Testing: How Intelligent QA Is Transforming Software Development

This loop of generation, execution, healing, and feedback is what makes agentic AI testing an adaptive and cost-efficient approach to AI-based test automation.

Key Use Cases for Agentic AI Testing

| Use Case | Description | Business Value |
| --- | --- | --- |
| UI Regression Testing | Vision/semantic healing of broken locators and flows. | 70–90% less maintenance. |
| API Testing | Auto-generates contract, boundary, and integration checks from Swagger/OpenAPI. | Faster backend validation; fewer integration defects. |
| Exploratory AI Testing | Learns from telemetry and user paths to create new test scenarios. | Expands coverage intelligently. |
| Continuous Validation | Runs autonomously in CI/CD with quality gates. | Enables daily/hourly deployments with confidence. |
| Risk-Based Testing | Prioritizes suites by code diffs, usage, and historical failure patterns. | Reduces defect leakage; optimizes execution time. |
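As an illustration of the risk-based row, the sketch below ranks tests by the three signals named there: code diffs, usage, and historical failures. The weights, the `SuiteEntry` fields, and the scoring formula are assumptions chosen for this example; a real system would tune or learn them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteEntry:
    name: str
    covers: frozenset        # source files this test exercises
    usage_share: float       # share of user traffic on this flow, 0..1
    failure_rate: float      # historical failure rate, 0..1


def risk_score(test: SuiteEntry, changed_files, w_diff=0.5, w_usage=0.2, w_fail=0.3) -> float:
    """Weighted sum of the three signals. Weights are illustrative assumptions."""
    touched = 1.0 if test.covers & changed_files else 0.0
    return w_diff * touched + w_usage * test.usage_share + w_fail * test.failure_rate


def prioritize(tests, changed_files, budget: int) -> list:
    """Return the names of the `budget` highest-risk tests, riskiest first."""
    ranked = sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)
    return [t.name for t in ranked[:budget]]
```

With a commit that touches only `profile.py`, a rarely used but directly affected profile test outranks a popular checkout test that the change does not touch, which is the behavior the table row describes.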

How Agentic AI Enhances the SDLC

| SDLC Stage | Traditional Testing | Agentic AI Approach |
| --- | --- | --- |
| Requirements | Manual test design from PRDs/user stories. | Auto-generates tests from PRDs, Figma, and specs. |
| Development | Separate QA setup; manual updates to suites. | Agents trigger on commits; generate unit/integration tests. |
| Testing | Scripted execution; brittle locators; reactive fixes. | Self-healing execution; adaptive assertions; flaky test control. |
| Deployment | Manual sign-offs and smoke checks. | Autonomous quality gates with risk-based selection. |
| Maintenance | Ongoing script rework and locator updates. | Predictive optimization and continuous learning. |
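The deployment row mentions autonomous quality gates. One way such a gate could be expressed is sketched below. The rule set (block on any critical failure, otherwise require a minimum pass rate) and the 95% default threshold are assumptions for illustration, not a description of a specific CI/CD tool.

```python
def gate(results, min_pass_rate: float = 0.95):
    """Decide whether a build may deploy.

    `results` is a list of (test_name, passed, critical) tuples.
    Returns (allowed, reason).
    """
    if not results:
        return (False, "no tests ran")

    # Any failed critical test blocks the release outright.
    failed_critical = [name for name, passed, critical in results if critical and not passed]
    if failed_critical:
        return (False, "critical failures: " + ", ".join(failed_critical))

    # Otherwise require an overall pass rate at or above the threshold.
    rate = sum(1 for _, passed, _ in results if passed) / len(results)
    if rate < min_pass_rate:
        return (False, f"pass rate {rate:.0%} below {min_pass_rate:.0%}")
    return (True, "ok")
```

For example, `gate([("login", False, True)])` returns `(False, "critical failures: login")`, so a failed critical test blocks the release regardless of the overall pass rate.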

Advantages of Agentic AI in Software Testing

- Higher Coverage: Agents generate new test cases from product changes or telemetry.

- Reduced Maintenance: Self-healing cuts locator-related failures and the script rework they cause.

- Faster Releases: Parallel, autonomous execution reduces QA bottlenecks.

- Intelligent Prioritization: Focuses on risk-prone areas first.

- Cross-Functional Accessibility: Developers, QAs, and PMs can define tests in natural language.

- Lower Long-Term Cost: Less human maintenance means lower TCO.

Traditional automation optimizes execution speed.

Agentic AI optimizes decision-making and coverage.

Traditional vs Agentic AI Testing — Comparison

| Feature | Traditional Automation | Agentic AI Testing |
| --- | --- | --- |
| Test Authoring | Manual scripting by SDETs/testers. | Natural-language intent; autonomous generation. |
| Maintenance | High; frequent locator updates. | Low; self-healing selectors and flows. |
| Locator Dependence | XPath/CSS heavy; brittle. | Vision + semantic mapping; locator-independent. |
| Coverage | Limited to scripted paths. | Expands automatically with each release. |
| Learning | None. | Continuous improvement via feedback loops. |
| Test Execution | Rigid, pre-ordered suites. | Contextual, risk-based, autonomous. |
| Toolchain | Selenium/Appium/Cypress frameworks. | AI agents, RAG pipelines, orchestration APIs. |
| Human Role | Script writer & maintainer. | Domain validator & governance. |
| ROI Over Time | Declines with scale due to maintenance. | Compounds as learning reduces effort. |
| Ideal Environment | Stable UI; low change velocity. | Agile, cloud-native, CI/CD-driven products. |

Traditional vs Agentic in Web Testing

Traditional automation uses tools like Selenium or Playwright.

When a CSS ID changes, dozens of scripts fail.

Agentic AI testing uses semantic and visual detection — it identifies that the “Login” button is now “Sign In” through reasoning and screen parsing.

No script updates needed.
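The "Login" to "Sign In" case can be sketched as a locator lookup with fallbacks. This is a simplified stand-in: real agents rely on vision models and richer page context, whereas this example uses a hypothetical synonym table plus string similarity from Python's standard `difflib` module. The page is again an in-memory dictionary of element id to label, and all function names are illustrative.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical synonym groups; a real agent would infer intent from context.
SYNONYMS = [{"login", "log in", "sign in"}, {"register", "sign up"}]


def same_intent(a: str, b: str) -> bool:
    """True if two labels are equal or belong to the same synonym group."""
    a, b = a.strip().lower(), b.strip().lower()
    return a == b or any(a in group and b in group for group in SYNONYMS)


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in the range 0..1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def locate(page: dict, element_id: str, expected_label: str, cutoff: float = 0.8) -> Optional[str]:
    """Return the id of the element to act on, healing the locator if needed."""
    if element_id in page:                      # original locator still valid
        return element_id
    for candidate_id, label in page.items():    # fallback 1: same intent
        if same_intent(label, expected_label):
            return candidate_id
    # Fallback 2: closest label by string similarity, if it clears the cutoff.
    best = max(page, key=lambda cid: similarity(page[cid], expected_label), default=None)
    if best is not None and similarity(page[best], expected_label) >= cutoff:
        return best
    return None                                 # nothing plausible: report, do not guess
```

Given the page `{"signin-btn": "Sign In", "help-link": "Help"}`, the call `locate(page, "login-btn", "Login")` returns `"signin-btn"`. Returning `None` when nothing clears the cutoff is a deliberate choice: a healed locator that points at the wrong element is worse than a reported failure.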

Result:

- Zero downtime for tests.

- No locator maintenance.

- Higher accuracy across browsers and devices.

How BotGauge Combines the Best of Both Worlds

BotGauge AQAAS (Autonomous QA as a Solution) blends traditional reliability with agentic intelligence — ideal for scaling teams that want results without building complex infrastructure.

BotGauge Capabilities

- Locator-independent testing (no XPath or selector pain).

- Plain-English test cases accessible to both QA and Dev teams.

- Built on RAG (Retrieval-Augmented Generation) — context-aware, not just prompt-based.

- Bulk test creation from PRDs, Figma, or demo videos.

- Unlimited executions and parallel runs — no usage fees.

- Human expert verification for critical test results.

- SOC 2 Type II compliance for enterprise security.

This hybrid model ensures your QA can evolve intelligently — without downtime, new hires, or tool migration.

Explore details → Pricing Plans or Contact Us to start your pilot.

When to Use What

| Scenario | Traditional Automation | Agentic AI Testing |
| --- | --- | --- |
| Stable, legacy systems | ✅ Good fit | ⚪ Optional |
| Rapid product changes | ⚠️ High maintenance | ✅ Ideal |
| Limited technical QA team | ⚠️ High learning curve | ✅ Easier adoption |
| Regulatory compliance | ✅ Transparent scripted steps | ✅ With human oversight & audit logs |
| Fast CI/CD cycles | ⚠️ Manual sync and gating | ✅ Continuous, risk-based gating |
| Budget optimization (TCO) | ⚠️ Costs grow with maintenance | ✅ Lower TCO over time |

Conclusion

Software testing is entering its intelligent era.

Traditional test automation improved speed — but agentic AI testing adds reasoning, adaptability, and autonomy.

For QA leaders, it’s not a matter of if but when to integrate AI into the testing lifecycle.

With BotGauge AI Agents, you get:

- Self-healing, locator-independent testing

- Domain-expert validation

- Zero maintenance, unlimited scalability

Transform your QA with BotGauge AQAAS – Autonomous, Adaptive, and Intelligent.

Deliver quality software at the speed your business demands.